Russell Coker: IMA/EVM Certificates

I've been experimenting with IMA/EVM. Here is the Sourceforge page for the upstream project [1]. The aim of that project is to check hashes and maybe public key signatures on files before performing read/exec type operations on them. It can be used as the next logical step after booting a signed kernel with a TPM. I am a long way from getting that sort of thing going; just getting the kernel to boot and load keys is my current challenge, and it isn't helped by the lack of documentation on error messages. This blog post started as a way of documenting the error messages so that future people who google the errors can get a useful result. I am not trying to document everything, just to help people get through some of the first problems.

I am using Debian for my work, but some of this will apply to other distributions (particularly the kernel error messages). Debian has the ima-evm-utils package but no other support for IMA/EVM. To get this going in Debian you need to compile your own kernel with IMA support and then boot it with kernel command-line options to enable IMA. In recent kernels that includes lsm=integrity as a mandatory requirement to prevent a kernel Oops after mounting the initrd (there is already a patch to fix this).
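One way to confirm that the option actually reached the kernel is to look at /proc/cmdline after booting. The following is only a minimal sketch: cmdline_has_lsm is a hypothetical helper name (not part of any package), and it only checks that the name appears in the comma-separated lsm= list, not that IMA is otherwise configured correctly.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the given kernel command line has an
# lsm= option whose comma-separated list contains the named LSM.
# Usage: cmdline_has_lsm "<command line>" <lsm-name>
cmdline_has_lsm() {
    # Intentionally unquoted so the command line is split into options.
    for opt in $1; do
        case "$opt" in
            lsm=*)
                list=${opt#lsm=}
                case ",$list," in
                    *",$2,"*) return 0 ;;
                esac
                ;;
        esac
    done
    return 1
}

# Example against the running kernel:
#   cmdline_has_lsm "$(cat /proc/cmdline)" integrity && echo "lsm=integrity is set"
```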

If you just want to use IMA (not get involved in development) then a good option would be to use RHEL (here is their documentation) [2] or SUSE (here is their documentation) [3]. Note that both RHEL and SUSE use older kernels, so their documentation WILL lead you astray if you try to use the latest kernel.org kernel.

The Debian initrd

I created a script named /etc/initramfs-tools/hooks/keys with the following contents to copy the key(s) from /etc/keys to the initrd where the kernel will load it/them. The kernel configuration determines whether x509_evm.der or x509_ima.der (or maybe both) is loaded. I haven't yet worked out which key is needed when.

#!/bin/bash
mkdir -p ${DESTDIR}/etc/keys
cp /etc/keys/* ${DESTDIR}/etc/keys

Making the Keys

#!/bin/sh

GENKEY=ima.genkey

cat << __EOF__ >$GENKEY
[ req ]
default_bits = 1024
distinguished_name = req_distinguished_name
prompt = no
string_mask = utf8only
x509_extensions = v3_usr

[ req_distinguished_name ]
O = `hostname`
CN = `whoami` signing key
emailAddress = `whoami`@`hostname`

[ v3_usr ]
basicConstraints=critical,CA:FALSE
#basicConstraints=CA:FALSE
keyUsage=digitalSignature
#keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectKeyIdentifier=hash
authorityKeyIdentifier=keyid
#authorityKeyIdentifier=keyid,issuer
__EOF__

openssl req -new -nodes -utf8 -sha1 -days 365 -batch -config $GENKEY \
        -out csr_ima.pem -keyout privkey_ima.pem
openssl x509 -req -in csr_ima.pem -days 365 -extfile $GENKEY -extensions v3_usr \
        -CA ~/kern/linux-5.11.14/certs/signing_key.pem -CAkey ~/kern/linux-5.11.14/certs/signing_key.pem -CAcreateserial \
        -outform DER -out x509_evm.der

To get the below result I used the above script to generate a key. It is the /usr/share/doc/ima-evm-utils/examples/ima-genkey.sh script from the ima-evm-utils package, changed to sign the new key with the key generated during kernel compilation. You can copy the files in the certs directory from one kernel build tree to another to have the same certificate and use the same initrd configuration. After generating the key I copied x509_evm.der to /etc/keys on the target host and built the initrd before rebooting.
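Before rebooting it can save time to confirm that the key really ended up inside the initrd. The sketch below assumes the standard Debian tools update-initramfs and lsinitramfs and the usual /boot/initrd.img-$(uname -r) path; listing_has_keys is a hypothetical helper name that only inspects a file listing given on stdin.

```shell
#!/bin/sh
# Hypothetical helper: reads an initrd file listing on stdin (one path per
# line) and succeeds if it contains at least one .der file under etc/keys.
listing_has_keys() {
    grep -Eq '^(\./|/)?etc/keys/[^/]+\.der$'
}

# On the target host, as root (paths as used in this post; the initrd path
# assumes you are checking the initrd of the currently running kernel):
#   cp x509_evm.der /etc/keys/
#   update-initramfs -u
#   lsinitramfs /boot/initrd.img-$(uname -r) | listing_has_keys \
#       && echo "key is in the initrd"
```

If the check fails, the hook script is the first place to look, for example whether it is executable.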

[ 1.050321] integrity: Loading X.509 certificate: /etc/keys/x509_evm.der
[ 1.092560] integrity: Loaded X.509 cert 'xev: etbe signing key: 99d4fa9051e2c178017180df5fcc6e5dbd8bb606'

Errors

Here are some of the kernel error messages I received along with my best interpretation of what they mean.

[ 1.062031] integrity: Loading X.509 certificate: /etc/keys/x509_ima.der
[ 1.063689] integrity: Problem loading X.509 certificate -74

Error -74 means -EBADMSG, which means there's something wrong with the certificate file. I have got that from /etc/keys/x509_ima.der not being in DER format, and I have got it from a DER file that contained a key pair that wasn't signed.
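For the first of those causes, a rough check of the file encoding can be done without rebooting. This is a heuristic sketch, not what the kernel does: cert_encoding is a hypothetical helper name, and it only looks at the first bytes of the file (PEM files start with "-----BEGIN", a DER certificate starts with the ASN.1 SEQUENCE tag 0x30). Passing this check says nothing about whether the certificate is signed.

```shell
#!/bin/sh
# Hypothetical helper: prints "pem", "der" or "unknown" for the given file,
# based only on its first bytes.
cert_encoding() {
    if [ "$(head -c 10 "$1" 2>/dev/null | tr -d '\0')" = "-----BEGIN" ]; then
        echo pem
    elif [ "$(od -An -tx1 -N1 "$1" 2>/dev/null | tr -d ' \n')" = "30" ]; then
        echo der
    else
        echo unknown
    fi
}

# Example:
#   cert_encoding /etc/keys/x509_ima.der
# A PEM certificate can be converted with openssl (x509_ima.pem is an
# assumed input file name):
#   openssl x509 -in x509_ima.pem -outform DER -out x509_ima.der
```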

[ 1.049170] integrity: Loading X.509 certificate: /etc/keys/x509_ima.der
[ 1.093092] integrity: Problem loading X.509 certificate -126

Error -126 means -ENOKEY, so the key wasn't in the file or the key wasn't signed by the kernel signing key.
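The negative numbers in these messages are Linux errno values. As a small convenience, the lookup below covers only the three codes that appear in this post, with the interpretations given here. integrity_errno is a hypothetical helper name, and the numbers assume the generic errno numbering used on x86 and ARM (a few other architectures number them differently).

```shell
#!/bin/sh
# Hypothetical helper: maps the error numbers from the "integrity:" messages
# in this post to errno names. Accepts the number with or without the minus.
integrity_errno() {
    case "${1#-}" in
        2)   echo "ENOENT: file not found on the initrd" ;;
        74)  echo "EBADMSG: something wrong with the certificate file" ;;
        126) echo "ENOKEY: key missing or not signed by the kernel signing key" ;;
        *)   echo "unknown: look it up in the kernel errno headers" ;;
    esac
}

integrity_errno -74
```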

[ 1.074759] integrity: Unable to open file: /etc/keys/x509_evm.der (-2)

Error -2 means -ENOENT, so the file wasn't found on the initrd. Note that it does NOT look at the root filesystem.

References

Debian has work-in-progress packages for Kubernetes, which work well enough enough for a testing and learning environement. Bootstraping a cluster with the

Debian has work-in-progress packages for Kubernetes, which work well enough enough for a testing and learning environement. Bootstraping a cluster with the

Release 0.3.4 of the

Release 0.3.4 of the  (Blogging this, since this is a recurring anti-pattern I noticed at several customers and often comes up during deployments of 3rd party repositories.)

Update on 2021-02-19: clarified, that Signed-By requires apt >= 1.1, thanks Vincent Bernat

Many upstream projects provide Debian repository instructions like this:

(Blogging this, since this is a recurring anti-pattern I noticed at several customers and often comes up during deployments of 3rd party repositories.)

Update on 2021-02-19: clarified, that Signed-By requires apt >= 1.1, thanks Vincent Bernat

Many upstream projects provide Debian repository instructions like this:

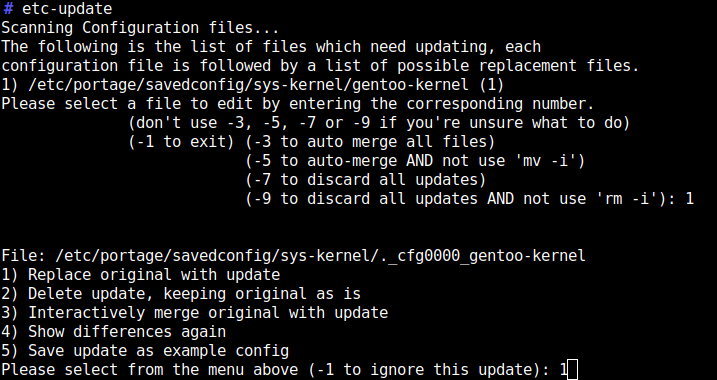

The first install of a Gentoo kernel needs to be somewhat manual if you want to optimize the kernel for the (virtual) system it boots on.

In

The first install of a Gentoo kernel needs to be somewhat manual if you want to optimize the kernel for the (virtual) system it boots on.

In  The kernel should auto-build once new versions become available via portage.

Again the

The kernel should auto-build once new versions become available via portage.

Again the

I have an original

I have an original  My maybe most impactful piece of code is

My maybe most impactful piece of code is  The thirteenth release of

The thirteenth release of